Python Web Scraping: Crawling 房天下 (Fang.com)

Without further ado, here is the code!

```python
import requests as req
import pandas as pd
from bs4 import BeautifulSoup
from sqlalchemy import create_engine


def getHouseInfo(url):
    """Scrape one listing page and return its fields as a dict."""
    info = {}
    soup = BeautifulSoup(req.get(url).text, "html.parser")
    resinfo = soup.select(".tab-cont-right .trl-item1")
    # Collect layout, floor area, unit price, orientation, floor, and decoration.
    for item in resinfo:
        # Each item holds the value on the first line and its label on the second.
        tmp = item.text.strip().split("\n")
        name = tmp[1].strip()
        if "朝向" in name:  # orientation: drop the "进门" (entrance) qualifier
            name = name.replace("进门", "")
        if "楼层" in name:  # floor: keep only the two-character label
            name = name[0:2]
        if "地上层数" in name:  # "floors above ground" is stored as "楼层" (floor)
            name = "楼层"
        if "装修程度" in name:  # "decoration level" is stored as "装修" (decoration)
            name = "装修"
        info[name] = tmp[0].strip()
    xiaoqu = soup.select(".rcont .blue")[0].text.strip()
    info["小区名字"] = xiaoqu  # residential community name
    zongjia = soup.select(".tab-cont-right .trl-item")
    info["总价"] = zongjia[0].text  # total price
    return info


domain = "http://esf.anyang.fang.com/"
city = "house/"


# Get the total number of pages.
def getTotalPage():
    res = req.get(domain + city + "i31")
    soup = BeautifulSoup(res.text, "html.parser")
    endPage = soup.select(".page_al a").pop()['href']
    # Slice off the "i3" prefix. str.strip("i3") would strip characters, not a
    # prefix, and would turn e.g. "i330" into "0".
    pageNum = endPage.strip("/").split("/")[1][len("i3"):]
    print("loading..... " + pageNum + " pages in total.....")
    return pageNum


# Scrape one page of listings.
def pageFun(i):
    pageUrl = domain + city + "i3" + i
    print(pageUrl + " loading... page " + i + ".....")
    res = req.get(pageUrl)
    soup = BeautifulSoup(res.text, "html.parser")
    houses = soup.select(".shop_list dl")
    pageInfoList = []
    for house in houses:
        try:
            # print(domain + house.select("a")[0]['href'])
            info = getHouseInfo(domain + house.select("a")[0]['href'])
            pageInfoList.append(info)
            print(info)
        except Exception as e:
            print("----> exception, skipping and continuing", e)
    df = pd.DataFrame(pageInfoList)
    return df


connect = create_engine("mysql+pymysql://root:root@localhost:3306/houseinfo?charset=utf8")

for i in range(1, int(getTotalPage()) + 1):
    try:
        df_onePage = pageFun(str(i))
    except Exception as e:
        print("Exception", e)
        continue  # skip this page so a missing or stale DataFrame is never written
    df_onePage.to_sql("city_house_price", connect, schema="houseinfo", if_exists="append")
```
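One detail in `getTotalPage` deserves a closer look: the page count is read from the `href` of the last pagination link. Below is a minimal sketch of that parsing step on its own, assuming the link looks like `/house/i3100/` (the format is inferred from the code above, not confirmed against the live site). The helper name `parse_page_count` is hypothetical. It also shows why `str.strip("i3")` is risky here: it removes any leading or trailing `i` and `3` characters rather than the literal prefix `i3`.

```python
def parse_page_count(href: str, prefix: str = "i3") -> int:
    """Extract the page number from a pagination href such as '/house/i3100/'.

    Hypothetical helper; the href format is an assumption.
    """
    # Take the last path segment, e.g. 'i3100'.
    segment = href.strip("/").split("/")[-1]
    if not segment.startswith(prefix):
        raise ValueError(f"unexpected pagination href: {href!r}")
    # Slice off the prefix instead of calling str.strip, which removes
    # individual characters from both ends.
    return int(segment[len(prefix):])


if __name__ == "__main__":
    print(parse_page_count("/house/i3100/"))  # 100
    print(parse_page_count("/house/i330/"))   # 30
    # Character-based stripping loses digits when the page number starts with 3:
    print("i330".strip("i3"))                 # 0
```

With 30 pages, the `strip`-based version would report 0 pages and the main loop would not run at all, so slicing off the prefix is the safer choice.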

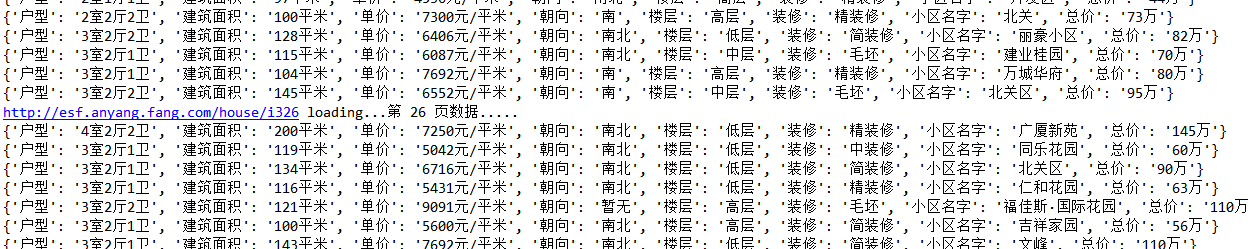

运行结果:
